In my job as product marketing director for vManager and MDV, I hear this question all the time: when will verification be done? If it isn't asked outright, there is at least that rattling and clanging you just know is going on in people's heads as they try to formulate their questions and concerns. So I thought I would write about it, if nothing else to stir the conversation about what is known and what is not. Perhaps it can go further than that, as the discussion turns to what is science, what is art, and how to navigate between the two.

I personally love this topic, and here is why. Just because you can define functional and code coverage to represent doneness does not mean you are defining completeness, so you get lots of interesting debate. It is also because of the industry we live in, and the expectation that we should be able to have "sign off" in this space, just as we do in other areas of EDA. Functional verification can be, and is, imprecise: how many tests do you need, how much functional coverage is necessary for a high-quality design, how do we know we have a comprehensive verification plan, how many assertions should be implemented, and many more. It turns out that functional verification lives at that perfect intersection of human factors, science, and art, and as such can only be addressed by a well-defined, prescriptive methodology. You may ask why I added human factors. It is because verification is part art, and like any art, there is room for interpretation, there are things learned along the way, and there is lots of judgment to be applied. While there are guidelines for the subjective bits, in the end it's good old-fashioned engineering and a prescriptive methodology that make the difference between success and failure.

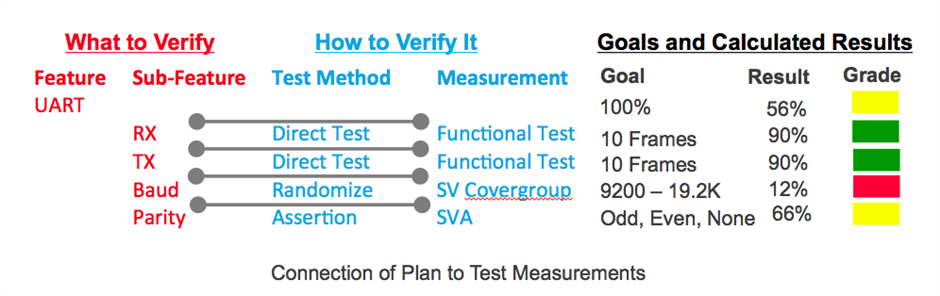

My first two years (of the nine years I have had this job) were spent asking experts and customers alike the same question, given so many variables: how do you know when you're done? So what do we know, and what works? We at Cadence call our methodology Metric-Driven Verification (MDV), because you can only improve what you can clearly measure, and data-driven information is the only objective measurement of completion. One layer down, the key component of closure in MDV is plan-to-closure. The reason is that the verification state space of any design is too large to cover exhaustively in the time allotted to a project, so you have to decide deliberately what to verify. Plan-to-closure adopts and prescribes a formalized (human) planning process that focuses on features, not RTL: it breaks features down into key functions (what to verify), then defines how each function should be measured. Logically linking the plan to those functional metrics allows metric collection to be automated. We call this the executable vPlan (verification plan). It means the status of each feature can be reported definitively and objectively against a goal. This is a big deal for managers who need depth and visibility beyond verbal answers. Very often managers have to deal with subjective responses from verification engineers, such as "No, we are not on track." That is vague and leaves you hanging, wondering where the problems are. MDV is definitive: you know whether you're on track, and you know in detail where you are not.
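To make the idea of an executable plan more concrete, here is a minimal Python sketch, assuming a toy model in which each plan feature is linked directly to named coverage bins. The `Feature` class, the `report` function, and the DMA example are hypothetical illustrations, not vManager's data model or API; the point is only how linking features to metrics lets status roll up automatically and be compared against a goal.

```python
from dataclasses import dataclass, field


@dataclass
class Feature:
    """A plan section: a feature broken into sub-features and linked metrics.

    Hypothetical model: metrics are coverage bins mapped to hit/not-hit.
    """
    name: str
    goal: float  # target coverage percentage for this feature
    bins: dict[str, bool] = field(default_factory=dict)
    children: list["Feature"] = field(default_factory=list)

    def counts(self) -> tuple[int, int]:
        """Return (hit, total) bins for this feature and all sub-features."""
        hit = sum(self.bins.values())
        total = len(self.bins)
        for child in self.children:
            child_hit, child_total = child.counts()
            hit += child_hit
            total += child_total
        return hit, total

    def score(self) -> float:
        """Rolled-up coverage percentage (0.0 if nothing is linked yet)."""
        hit, total = self.counts()
        return 100.0 * hit / total if total else 0.0


def report(feature: Feature, depth: int = 0) -> list[str]:
    """Produce one status line per feature, compared against its own goal."""
    score = feature.score()
    status = "on track" if score >= feature.goal else "BEHIND"
    indent = "  " * depth
    lines = [f"{indent}{feature.name}: {score:.1f}% (goal {feature.goal:.0f}%) {status}"]
    for child in feature.children:
        lines.extend(report(child, depth + 1))
    return lines


# Hypothetical plan for a DMA block, with a few bins already hit.
plan = Feature("DMA engine", goal=90, children=[
    Feature("Burst transfers", goal=95,
            bins={"len_1": True, "len_4": True, "len_16": False}),
    Feature("Error handling", goal=80,
            bins={"bus_error": True, "timeout": True}),
])

for line in report(plan):
    print(line)
```

This sketch rolls up raw bin counts, so a feature with many bins weighs more in its parent's score; averaging child percentages instead would be an equally plausible choice, and a real plan would likely link other kinds of metrics too, such as assertions.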


So what don’t we know? While there are clear guidelines on what to verify and how to verify a particular feature, several factors are imprecise. Planning is iterative: the plan grows as you learn and is constantly adjusted to accommodate what you learn. In 2013, Cadence sponsored a panel at DVCon called “Best Practices in Verification Planning.” The consensus there was that planning needs to accommodate iteration and human factors, and that failing to plan means planning to fail. The vManager planning process accommodates iteration by supporting dynamic updates to verification plans, setting different goals for different milestones, and using automation to merge many test results into a single meaningful metric representing "doneness."
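The last two mechanisms, merging results and per-milestone goals, can be sketched in a few lines of Python. This assumes, hypothetically, that each regression run reports the set of coverage bins it hit and that merging is a simple union; `merge_runs`, `MILESTONE_GOALS`, and the bin names are illustrative only and do not describe how vManager actually stores or merges data.

```python
# Hypothetical model: each regression run reports the set of coverage bins it hit.


def merge_runs(runs: list[set[str]]) -> set[str]:
    """Union of bins hit across all runs: a bin counts once any test hits it."""
    merged: set[str] = set()
    for hits in runs:
        merged |= hits
    return merged


def coverage_percent(hit: set[str], all_bins: set[str]) -> float:
    """Percentage of the plan's bins that were hit (bins outside the plan are ignored)."""
    if not all_bins:
        return 0.0
    return 100.0 * len(hit & all_bins) / len(all_bins)


# Hypothetical milestone goals: the same plan is held to a lower bar
# early in the project and a higher one at the end.
MILESTONE_GOALS = {"alpha": 60.0, "beta": 85.0, "final": 100.0}


def milestone_status(percent: float) -> dict[str, bool]:
    """For each milestone, report whether the merged metric meets its goal."""
    return {name: percent >= goal for name, goal in MILESTONE_GOALS.items()}


all_bins = {"rd", "wr", "rd_wr_collision", "reset_mid_burst", "fifo_full"}
runs = [
    {"rd", "wr"},
    {"wr", "fifo_full"},
    {"rd", "rd_wr_collision"},
]

merged = merge_runs(runs)
pct = coverage_percent(merged, all_bins)
print(f"merged coverage: {pct:.1f}%")  # 4 of 5 bins hit
print(milestone_status(pct))
```

A union is the simplest merge policy: a bin counts as covered once any test hits it. The same merged number is then read against a lower bar early in the project and a higher one later, which is one way a plan can evolve without redefining its metrics.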

Another aspect of what we don’t precisely know is "How many metrics do we need to define?" The answer to this question is much more difficult. Is it new IP or existing IP? Is it purchased or homegrown? Is it for a mission-critical application, or is it only a proof of concept? These are all aspects of quality, and quality goals differ depending on end use. As my dad always used to say, measure twice, cut once, and the same applies here. The more you measure, the fewer errors you are apt to make. The more metrics you use, the higher the quality you will have, and coverage is a key metric because it is a leading indicator of design bugs. But "how much" is the art part, because only you know the critical aspects of your design, what is essential to verify, and what is not. There is no substitute for a really good verification engineer applying proven principles of functional verification and implementing MDV to automate and measure progress.

Which brings me to my last topic: signoff. Is there such a thing in functional verification? Stay tuned for future blogs; I at least have an opinion.

Any questions or comments? Email us at mdv@cadence.com.

John Brennan
